In a previous post I explained the dreaded diamond dependency problem. In this post I'll look at a solution.

Ever seen an error like this when building a package?

Couldn't match expected type `bytestring-0.9.0.4:Data.ByteString.ByteString' against inferred type `ByteString'

Or when configuring a package?

Warning: This package indirectly depends on multiple versions of the same package. This is highly likely to cause a compile failure.
package pureMD5-0.1.2 requires bytestring-0.9.0.4
package binary-0.4.1 requires bytestring-0.9.1.0
package cabal2arch-0.3 requires bytestring-0.9.1.0

These failures were pretty common.

So the good news is that the latest release of cabal-install does solve the problem. It contains a completely new package dependency resolution algorithm based on constraint solving.

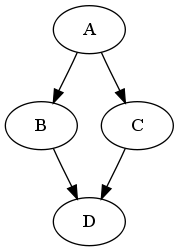

The problem space is this: we've got a bunch of packages, say A, B, C, D.

We've got a bunch of versions of those packages, let's say:

- A-1.0, A-1.1

- B-1.0

- C-1.0, C-2.0

- D-1.0, D-1.1

Each package version has dependencies on other packages along with version constraints, for example A-1.0 might need C >= 1 and A-1.1 might need C >= 2. Then given a goal like A >= 1, we have to pick exactly one version of package A and one version of C and so on recursively until we have picked a package version to satisfy each dependency. So even if we can get to a package by following two different dependency paths, we must end up picking the same version. That's what it means to avoid inconsistent dependencies. Of course the version we pick for a package has to satisfy the version constraints of all the packages that depend on it.
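To make the problem statement concrete, here is a minimal sketch in Python of the example index and of what "consistent" means. The representation (version tuples, predicates for constraints) and the dependencies given to B and C are my own assumptions for illustration, not how cabal-install stores things.

```python
def at_least(*minimum):
    """Build a version constraint of the form `>= minimum`."""
    return lambda version: version >= minimum


# Toy index: package -> version -> {dependency: constraint predicate}.
# Only the dependencies of A come from the text; the rest are invented.
INDEX = {
    "A": {(1, 0): {"C": at_least(1)}, (1, 1): {"C": at_least(2)}},
    "B": {(1, 0): {"C": at_least(1)}},
    "C": {(1, 0): {"D": at_least(1)}, (2, 0): {"D": at_least(1)}},
    "D": {(1, 0): {}, (1, 1): {}},
}


def is_consistent(plan, index=INDEX):
    """Check an install plan, given as {package: chosen version}.

    Because the plan is a dict, each package has exactly one version.
    The plan is valid when every dependency of every chosen version is
    also in the plan and its chosen version satisfies the constraint.
    """
    for package, version in plan.items():
        for dep, allowed in index[package][version].items():
            if dep not in plan or not allowed(plan[dep]):
                return False
    return True
```

With this index, the plan A-1.1, C-2.0, D-1.1 is consistent, while swapping in C-1.0 is not, because A-1.1 needs C >= 2.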

The problem space can get extremely large. In theory each choice about a package version is independent. In the above example, supposing we have to pick a version of each of A, B, C and D, we have two choices for A, one choice for B and two choices each for C and D, which gives us 2*1*2*2 = 8 combinations overall. In general the number of combinations grows exponentially with the number of packages, so we cannot explore the entire space. That may not seem important for this example with just 4 packages but there are over 700 packages on hackage and we'd like to be able to install most of them at once. We need to apply some smarts.
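The arithmetic is easy to check with a few lines of Python. This is only a sketch of the counting argument; the 700-package case assumes, purely for illustration, that every package has two versions.

```python
from itertools import product
from math import prod

VERSIONS = {
    "A": ["1.0", "1.1"],
    "B": ["1.0"],
    "C": ["1.0", "2.0"],
    "D": ["1.0", "1.1"],
}


def count_combinations(versions):
    """Size of the search space if every choice were independent."""
    return prod(len(vs) for vs in versions.values())


def all_combinations(versions):
    """Enumerate every assignment of one version per package (brute force)."""
    names = sorted(versions)
    for choice in product(*(versions[name] for name in names)):
        yield dict(zip(names, choice))
```

For the four packages above this enumerates 8 assignments. With 700 packages of two versions each the count is 2 to the power 700, which is why brute force is not an option.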

Note that this is actually an NP-complete problem. It's possible to encode 3-SAT into the package dependency problem. Fortunately most real instances are fairly easy so we need not give up hope.
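Here is one way such an encoding could look, as a Python sketch. It is not necessarily the encoding anyone else uses: each boolean variable becomes a package with version 1 (false) and version 2 (true), each clause becomes a package with one version per literal, and a single goal package depends on every clause. The brute-force `installable` check is only there to test tiny formulas.

```python
from itertools import product


def encode_3sat(clauses):
    """Encode a CNF formula as a toy package index.

    `clauses` is a list of clauses, each a list of non-zero ints in DIMACS
    style (3 means x3, -3 means "not x3"). The result maps
    package -> version -> {dependency: set of allowed versions}.
    """
    index = {}
    goal_deps = {}
    for j, clause in enumerate(clauses):
        name = f"clause{j}"
        index[name] = {}
        for k, literal in enumerate(clause, start=1):
            var = f"x{abs(literal)}"
            # Variable packages: version 1 means false, version 2 means true.
            index.setdefault(var, {1: {}, 2: {}})
            # Version k of the clause package needs literal k to hold.
            index[name][k] = {var: {2} if literal > 0 else {1}}
        goal_deps[name] = set(index[name])  # any version of the clause will do
    index["goal"] = {1: goal_deps}
    return index


def installable(index):
    """Brute force: is there one version per package satisfying all deps?"""
    names = sorted(index)
    for choice in product(*(sorted(index[name]) for name in names)):
        plan = dict(zip(names, choice))
        if all(plan[dep] in allowed
               for pkg, ver in plan.items()
               for dep, allowed in index[pkg][ver].items()):
            return True
    return False
```

Picking a version of a clause package amounts to picking the literal that makes the clause true, and the "one version per package" rule forces every variable to have a single truth value, so the goal is installable exactly when the formula is satisfiable.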

Ultimately we have to make choices between versions of a package and we have to end up with a consistent set of choices (or fail). It makes sense therefore to keep track of which versions of which packages are still valid choices. We can make the problem easier if we can eliminate choices that we know would lead to impossible or inconsistent solutions. We can propagate information both up and down the dependency graph to help us eliminate choices.

For example, suppose we looked at D-1.0 and D-1.1 and discovered that D-1.0 could not possibly be built on our operating system but D-1.1 could. If later on we discover we need some version of D then we only have one possibility, so we can pick it right away. More interestingly, it might also allow us to eliminate a choice higher up. Suppose for the sake of argument that C-1.0 needed D-1.0 and would not work with D-1.1; then by eliminating D-1.0 we can also eliminate C-1.0.

In the downwards direction, consider the effect of picking A-1.1 rather than A-1.0. In our example A-1.0 needs C >= 1 and A-1.1 needs C >= 2. So making this choice for A eliminates C-1.0.
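Both directions of propagation can be sketched over a table of remaining candidate versions. The function names `propagate_up` and `choose` are hypothetical, and the index below encodes the "for the sake of argument" case where C-1.0 only works with D-1.0.

```python
def at_least(*minimum):
    return lambda version: version >= minimum


def only(*exact):
    return lambda version: version == exact


# package -> version -> {dependency: constraint predicate}
INDEX = {
    "A": {(1, 0): {"C": at_least(1)}, (1, 1): {"C": at_least(2)}},
    "C": {(1, 0): {"D": only(1, 0)}, (2, 0): {"D": at_least(1)}},
    "D": {(1, 0): {}, (1, 1): {}},
}


def propagate_up(candidates, index):
    """Drop versions that have a dependency no remaining candidate satisfies.

    Repeats until nothing changes, so an elimination low in the graph can
    ripple upwards through several levels.
    """
    changed = True
    while changed:
        changed = False
        for pkg, versions in candidates.items():
            for version in sorted(versions):  # sorted() copies, so discard is safe
                deps = index[pkg][version]
                if any(not any(ok(v) for v in candidates[dep])
                       for dep, ok in deps.items()):
                    versions.discard(version)
                    changed = True
    return candidates


def choose(candidates, index, pkg, version):
    """Fix `pkg` to `version` and narrow its dependencies (downwards)."""
    candidates[pkg] = {version}
    for dep, ok in index[pkg][version].items():
        candidates[dep] = {v for v in candidates[dep] if ok(v)}
    return candidates
```

Starting from all versions, removing D-1.0 and calling `propagate_up` also removes C-1.0; starting afresh and calling `choose` for A-1.1 leaves C-2.0 as the only candidate for C.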

One class of strategies is to make a series of firm choices and try to build up to a consistent solution. If we do this with no backtracking then we will always run in polynomial time, but there will always be cases where we cannot find solutions even though a solution exists. The chance of us finding a solution in this kind of strategy depends on the order in which we make the choices.

The algorithm

The strategy I've adopted is to make package choices in a top down order. It is a kind of mixture between breadth first and depth first search. We have a set of packages that we know we will need and a set of specific package versions we've already picked. We also maintain a set of constraints on all the packages. This lets us know what versions are available for each package that we've yet to decide about.

In each round we pick a package, choose one version of it and update our set of constraints. If the version we picked depends on a package we have not seen yet then we add that package to the pending package set. When there is a package we know we need and there is only one version left then we pick it. That's the easy case.

The hard case is when all remaining packages have at least two versions we could pick. Here we just have to pick something and hope for the best. First of all, we have to decide which package we're going to make a choice for. A good heuristic here is to decide on packages higher up the dependency graph earlier since their dependencies impose constraints on later choices. We can do this by caching the depth first numbering for each package. Once we've decided on which package to go for then we have to decide which version to pick. The most sensible thing is to pick the highest version. However, there is a complication with installed packages that I'll come back to later.
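The rounds described above can be sketched in Python roughly as follows. This is a simplified illustration under my own assumptions, not the cabal-install code: the `rank` table stands in for the cached depth first numbering, and the `viable` forward check (skip versions whose dependencies have no candidates left) is an assumption about how conflicts are avoided.

```python
def at_least(*minimum):
    return lambda version: version >= minimum


def solve(index, goals, rank):
    """Greedy top-down solver sketch with no backtracking.

    index: package -> version -> {dependency: constraint predicate}
    goals: {package: constraint predicate} for the top-level targets
    rank:  package -> number, lower means higher up the dependency graph
    """
    candidates = {pkg: set(versions) for pkg, versions in index.items()}
    chosen, pending = {}, set()

    def constrain(pkg, ok, reason):
        candidates[pkg] = {v for v in candidates[pkg] if ok(v)}
        if not candidates[pkg]:
            raise ValueError(f"no version of {pkg} satisfies {reason}")
        if pkg not in chosen:
            pending.add(pkg)

    def viable(pkg, version):
        return all(any(ok(v) for v in candidates[dep])
                   for dep, ok in index[pkg][version].items())

    for pkg, ok in goals.items():
        constrain(pkg, ok, "the top-level goal")

    while pending:
        # Easy case: a needed package with a single version left.
        forced = [p for p in pending if len(candidates[p]) == 1]
        # Hard case: otherwise take the package highest up the graph.
        pkg = min(forced or pending, key=rank.__getitem__)
        options = [v for v in candidates[pkg] if viable(pkg, v)]
        if not options:
            raise ValueError(f"every remaining version of {pkg} conflicts")
        version = max(options)  # prefer the highest version
        pending.remove(pkg)
        chosen[pkg] = version
        candidates[pkg] = {version}
        for dep, ok in index[pkg][version].items():
            constrain(dep, ok, f"the dependencies of {pkg}")
    return chosen


INDEX = {
    "A": {(1, 0): {"C": at_least(1)}, (1, 1): {"C": at_least(2)}},
    "B": {(1, 0): {}},
    "C": {(1, 0): {"D": at_least(1)}, (2, 0): {"D": at_least(1)}},
    "D": {(1, 0): {}, (1, 1): {}},
}
RANK = {"A": 0, "B": 1, "C": 2, "D": 3}
```

For the goal A >= 1 the sketch picks A-1.1, which forces C-2.0, and then takes the highest D. B never enters the pending set because nothing needs it.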

Results

So this algorithm produces pretty good results in most cases. It always runs in polynomial time and, because of the way it gathers constraints, any solution it finds is guaranteed to be a valid consistent solution. The old dep solver, by contrast, would very often come up with inconsistent solutions. Remember: consistent solutions avoid the diamond dependency problem.

Another good thing about gathering constraints is that when we fail we have some information about why we failed because we know which additional constraint made the constraint set inconsistent. We can translate that into a human readable error message, though not necessarily a particularly brilliant one.
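A small sketch of that idea: if each constraint is recorded along with where it came from, the constraint that empties a candidate set can be reported together with the ones it clashed with. The `ConstraintSet` class, the message wording and the HaXml version numbers here are all hypothetical; the real cabal-install messages look different.

```python
class ConstraintSet:
    """Remaining versions per package, plus a log of accepted constraints."""

    def __init__(self, versions):
        self.remaining = {pkg: set(vs) for pkg, vs in versions.items()}
        self.history = {pkg: [] for pkg in versions}

    def add(self, pkg, ok, description, source):
        """Apply a constraint, or explain which one made the set inconsistent."""
        narrowed = {v for v in self.remaining[pkg] if ok(v)}
        if not narrowed:
            earlier = "; ".join(
                f"{desc} (needed by {src})" for desc, src in self.history[pkg]
            ) or "no earlier constraints"
            raise ValueError(
                f"cannot satisfy {pkg} {description} (needed by {source}); "
                f"it conflicts with: {earlier}"
            )
        self.remaining[pkg] = narrowed
        self.history[pkg].append((description, source))


def demo():
    constraints = ConstraintSet({"HaXml": [(1, 13, 3), (1, 19)]})
    constraints.add("HaXml", lambda v: v >= (1, 16), ">= 1.16", "Bar-2.0")
    try:
        constraints.add("HaXml", lambda v: v[:2] == (1, 13), "== 1.13.*", "Foo-1.0")
    except ValueError as err:
        return str(err)
    return None
```

Calling `demo()` returns a message that names both Foo-1.0 and Bar-2.0, which is about as much as this approach can say: it knows the last straw, not the best fix.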

By manually adding additional constraints we can also guide the solver to find solutions it would not otherwise find, or to avoid failure. In a sense this is just a hack to work around the fact that the solver isn't even better, but in the meantime it's pretty handy.

You can play around and get a sense of what the algorithm is doing if you run cabal install --dry-run -v pkg1 pkg2 etc and look at the intermediate output and the final solution.

The biggest test I've tried so far is trying to install all of hackage simultaneously. It takes several seconds to find a solution, which is not too bad. I had to discard about 30 packages to arrive at a consistent set. Adding any one of the discarded ones back leads to inconsistent dependencies, for example conflicts over which version of HaXml is required. There are also many packages that simply cannot ever be installed because they have missing dependencies.

Installed packages

So what about installed packages? I mentioned that there's a complication there. We often have to decide between picking an already installed version of a package and the available one from hackage (even if they are the same version). If we pick the installed version then we immediately pick up a lot of precise constraints, because the installed package depends on exact versions. That is often enough to prevent us from finding a solution. Indeed in the diamond dependency examples I gave previously it was clear that to find a consistent solution we would have to rebuild some existing installed package. So we cannot default to preferring installed packages.

A simple observation is that the available package always has looser constraints than the installed version, so it's always safe to pick the available version over the installed version. We can always 'improve' our solution at the end if we find the constraints we end up with are consistent with the constraints of the installed version. And that is what we do.

There is a further issue however. If ultimately we would like the option to use the installed version then we had better pick the same version of the available package and not just the highest available version. What we do is allow a per-package preference for the highest available version or the highest available version that has a corresponding installed version. For the 'install' command we prefer the latest version for the packages explicitly given and the installed version for all others. For the 'upgrade' command we prefer the latest version everywhere.
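The preference rule and the final 'improve' step can be sketched like this. All three function names are hypothetical and the data shapes are my own assumptions; the sketch only illustrates the policy described above.

```python
def pick_version(candidates, installed, prefer_installed):
    """Choose among the remaining available versions of one package.

    candidates: set of available versions still allowed by the constraints
    installed:  set of versions of this package that are already installed
    """
    if prefer_installed:
        also_installed = candidates & installed
        if also_installed:
            return max(also_installed)
    return max(candidates)


def preferences(command, targets, packages):
    """Per-package preference; True means prefer a version that is installed."""
    if command == "upgrade":
        return {pkg: False for pkg in packages}
    # 'install': latest for the named targets, installed for everything else.
    return {pkg: pkg not in targets for pkg in packages}


def can_reuse_installed(plan, installed_deps):
    """The 'improve' step: an installed instance can be reused only if the
    exact dependency versions it was built against match the final plan."""
    return all(plan.get(dep) == version
               for dep, version in installed_deps.items())
```

With bytestring 0.9.0.4 installed and 0.9.1.0 also available, preferring installed picks 0.9.0.4 and preferring latest picks 0.9.1.0. If the final plan ends up on 0.9.1.0, an installed package that was built against 0.9.0.4 fails `can_reuse_installed` and has to be rebuilt.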

That seems to work pretty well, though I've found people do get somewhat confused when cabal-install decides to re-install a package they already have. What is not clear to them is that it is rebuilding a package against different versions of its dependencies. Probably the thing to do is to make that clearer in the output of cabal install --dry-run.

Improvements

There are still plenty of improvements that could be made. The algorithm currently does not deal well with installing packages for older compilers because it finds out too late that a package it chose could only work with a newer version of the base library. The solution there is some bottom up constraint propagation. We should check at the beginning that each package version could possibly be installed with the current compiler and if not eliminate it.

There are still real cases where we end up with an avoidable conflict. For example we might have two packages that need HaXml, let's say Foo needs HaXml == 1.13.* while there are two versions of Bar, one that works with HaXml == 1.13.* and a later version that needs HaXml >= 1.16. If we end up picking Bar before Foo then we would default to the later version of HaXml but then fail when we find Foo needs the earlier version. This is not solved by the ordering heuristic because Foo and Bar do not depend on each other. This is an example where we need either more look ahead, backtracking or a much more sophisticated algorithm in general.
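The Foo and Bar case is small enough to reproduce with a deliberately stripped-down greedy picker in Python. The version numbers for Foo, Bar and HaXml are made up for illustration, and `greedy` is a hypothetical stand-in that takes a fixed order instead of using the real heuristics.

```python
def at_least(*minimum):
    return lambda version: version >= minimum


def series(*prefix):
    """Constraint like `== 1.13.*`: the version must start with the prefix."""
    return lambda version: version[:len(prefix)] == prefix


INDEX = {
    "Foo": {(1, 0): {"HaXml": series(1, 13)}},
    "Bar": {(1, 0): {"HaXml": series(1, 13)}, (2, 0): {"HaXml": at_least(1, 16)}},
    "HaXml": {(1, 13, 3): {}, (1, 19): {}},
}


def greedy(index, order):
    """Pick, in the given order, the highest version of each package whose
    dependencies still have candidates. No backtracking: None on failure."""
    candidates = {pkg: set(versions) for pkg, versions in index.items()}
    plan = {}
    for pkg in order:
        viable = [v for v in candidates[pkg]
                  if all(any(ok(d) for d in candidates[dep])
                         for dep, ok in index[pkg][v].items())]
        if not viable:
            return None
        version = max(viable)
        plan[pkg] = version
        candidates[pkg] = {version}
        for dep, ok in index[pkg][version].items():
            candidates[dep] = {d for d in candidates[dep] if ok(d)}
    return plan
```

Choosing Bar first commits to Bar-2.0 and so to HaXml >= 1.16, after which Foo has no viable version and the run fails. Choosing Foo first narrows HaXml to the 1.13 series, so the older Bar is picked and a solution is found, even though nothing in the dependency graph says Foo should come before Bar.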

The right long term approach I think is a proper complete constraint solving algorithm.